EXPERIENCE DESIGN

HUMAN MACHINE INTERFACE

DESIGN RESEARCH

AutoVision

All vector graphics courtesy of Adam Whitney and Cynthia Zhou

Renders Courtesy of Adam Whitney

Spring 2024 Studio Project Sponsored by Cognizant

Collaborators:

Leila Kazemzadeh

Adam Whitney

Cynthia Zhou

Instructor:

Noah Posner

Role:

Interviews & Data Collection

Research Synthesis

User Testing + Protocol

After-effects Animations

Initial Concept Rendering

Introduction

IN THE YEAR…

Driver roles will be redefined by autonomous vehicles.

Currently, drivers are vital for operating vehicles and managing safety and legal responsibilities. However, as autonomous technology advances, the role of the driver will diminish. Although AVs will eventually be far safer and more effective drivers than we are today, they have always had difficulty with one thing: dealing with human error.

PROBLEM

The pedestrian experience has been overlooked with the advent of autonomous vehicles. During our user research, many interviewees said that, regardless of any improvement to AV technology, they were far more worried about being in the vicinity of a driverless car than they were about a distracted human driver. This presents us with a key contradiction.

Human drivers can be inexperienced, inattentive, or under the influence, but as AV technology matures, it will behave more predictably and safely than humans. Despite all this, pedestrians have no clear way to understand an AV's intent as they would with a human driver, which leads to fear and uncertainty. This adds to the poor perception of AVs.

DESIGN OPPORTUNITY…

Promote safe pedestrian behavior by creating trust between the pedestrian and the vehicle, communicating vehicle intent through an implicit interaction that supports pedestrian behavior rather than dictating it.

Concept

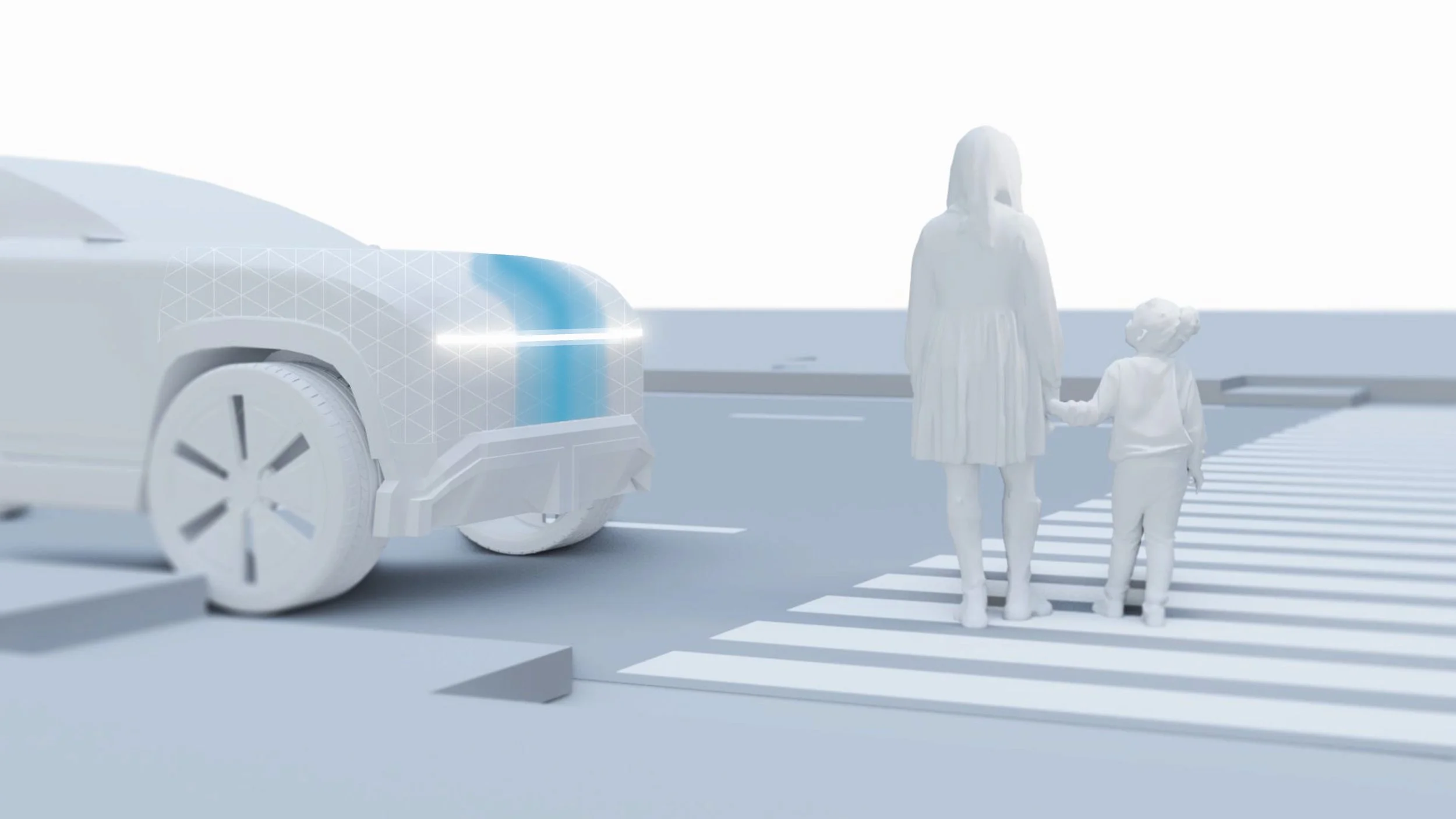

AutoVision is an external human-machine interface for autonomous vehicles. It bridges the perception gap between vehicle and pedestrian now that the driver is no longer present. It is multi-modal, with both a visual and an audio element. It functions well in a variety of scenarios, as seen in the video below:

Video courtesy of Adam Whitney

TECH SPECIFICATIONS

AutoVision uses pre-existing e-ink technology from the BMW iVision Dee for its visual interface. The scales map across the surface of the vehicle in customizable configurations. Additionally, the scales have light piping, which allows the animations to be front-lit. This prevents the visuals from being too bright but still allows for visibility in low-light scenarios.

The audio portion of the interface integrates into the car horn system, playing an indicator tone at half the frequency of a car horn. This keeps it from being overwhelming or being confused with the actual car horn.

IMPLEMENTATION

The business approach would be to centralize production, sell the parts to car manufacturers for their specific vehicles, and let manufacturers customize the interface aesthetically, within limits, based on their needs. The colorways would maintain a contrast ratio greater than 3:1 across differently colored vehicles.

STYLE GUIDE

The system allows manufacturers to apply the polygons to fit the unique contours of their vehicles, so long as they cover the hotspots identified during testing. Additionally, the colors for the indicator animation can be selected by the manufacturer, so long as they meet a contrast ratio greater than 3:1.
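The 3:1 rule can be checked numerically. The project does not say which contrast definition it uses, so the sketch below assumes the WCAG 2.x contrast ratio, computed from the relative luminance of two sRGB colors. The function names and the example colors are hypothetical and only illustrate how a manufacturer might vet an indicator color against a body color.

```python
from typing import Tuple

RGB = Tuple[int, int, int]  # 8-bit sRGB channels, 0-255


def relative_luminance(rgb: RGB) -> float:
    """Relative luminance of an sRGB color, per the WCAG 2.x definition."""
    def linearize(channel: int) -> float:
        c = channel / 255.0
        # Undo the sRGB transfer curve to get linear light.
        return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(a: RGB, b: RGB) -> float:
    """Contrast ratio between two colors, from 1.0 (identical) up to 21.0."""
    la, lb = relative_luminance(a), relative_luminance(b)
    lighter, darker = max(la, lb), min(la, lb)
    return (lighter + 0.05) / (darker + 0.05)


def meets_minimum(indicator: RGB, body: RGB, minimum: float = 3.0) -> bool:
    """True if the indicator color exceeds the minimum contrast against the body color."""
    return contrast_ratio(indicator, body) > minimum


# Hypothetical pairing: a white indicator animation on a black vehicle body.
white_on_black = contrast_ratio((255, 255, 255), (0, 0, 0))
```

A check like this would run once per indicator color and body color pairing. A pairing that fails would be rejected before it reaches the vehicle.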

Design Process Overview

PRELIMINARY RESEARCH + AEIOU

Since our prompt was very broad, we first identified the stakeholders and activities to contextualize the space. After an initial, very rough literature review, we decided to conduct an AEIOU observation of manually driven or semi-autonomous electric vehicles while their owners charged them. This led us to identify that drivers retain a sense of responsibility for their vehicle at the current stage of automation.

Additionally, our secondary research helped us understand more about pedestrian behavior and what kinds of interfaces have been studied to aid in the communication gap.

INTERVIEWS

To bolster our secondary research, we conducted interviews with a diverse range of people, including those who walk to commute as well as first responders, to understand their experiences with and perceptions of autonomous vehicles. Our interviewees were generally distrustful of AV technology as a whole.

Interviewees claimed that, regardless of any improvement to AV technology, they were more worried about being in the vicinity of a driverless car than they were about being near a distracted human driver. This ties to an important finding from our secondary research: pedestrians' fear of autonomous vehicles can increase their tendency to move dangerously or unpredictably in front of a moving vehicle. This is especially true among children.

AFFINITY MAP

PROTOTYPICAL ROLES + MINDSETS

Affinity mapping led us to identify key stakeholders within the end-user experience where we saw opportunity. We assigned prototypical mindsets and roles to these stakeholders to help contextualize them within the system.

Pedestrians became the target user in this project because of the lack of robust design consideration for their emotional journey, and because of the untapped potential to make autonomous vehicles more widely accepted. Many of the risk factors and pain points that pedestrians feel in their interactions with autonomous vehicles arise in urban environments.

EMOTIONAL JOURNEY MAP

We mapped the emotional journey of a pedestrian crossing the street and identified many pain points faced by the pedestrian, such as the lack of consideration that drivers tend to show, which leads to a general distrust of cars as a whole.

HIERARCHY

Our solution draws from this hierarchical framework to address the needs of our key user group, the pedestrian. The goal of our design is, first and foremost, to promote safe pedestrian behavior by encouraging trust between the pedestrian and the AV. We want to create that trust by having the car communicate its intention through an implicit interaction, allowing us to support and inform pedestrian behavior rather than dictate it.

ASPIRATIONAL USER JOURNEY

PRELIMINARY CONCEPT

IDEATION

TESTING OBJECTIVES

HOTSPOT IDENTIFICATION

To determine where on the AV our interface would have the most impact, we conducted a test in which users acted as pedestrians, crossing in front of and beside a moving vehicle, while we recorded where their gazes most often rested. Identifying visual hotspots allowed us to place the technology only at the locations that matter most, reducing overall cost and material use. The investigation involved seven testers, two scenarios, and five rounds of testing.

Simulate Driverless Future AV Scenarios: Tracing paper was used to cover the front of the vehicle in a way that obscured the driver from the users while still allowing the driver visibility.

Eye Tracking Glasses: Users wore these glasses throughout the test in order to track and record the direction of their gaze as they crossed in front of the car.
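One way to turn eye-tracking recordings into hotspots is to total how long each tester's gaze rested on each region of the vehicle and then rank the regions. The sketch below assumes the recordings have already been reduced to (region, dwell time) pairs. That data format, the function name, and the sample values are hypothetical placeholders, not the study's actual tooling or measurements.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def rank_hotspots(fixations: Iterable[Tuple[str, float]]) -> List[Tuple[str, float]]:
    """Sum dwell time per vehicle region and return regions sorted longest first."""
    totals: Counter = Counter()
    for region, dwell_seconds in fixations:
        totals[region] += dwell_seconds
    return totals.most_common()


# Placeholder values for illustration only; not measurements from the study.
sample = [
    ("region_a", 0.4),
    ("region_b", 0.2),
    ("region_a", 0.3),
    ("region_c", 0.1),
]
ranked = rank_hotspots(sample)
```

Pooling fixations across testers, scenarios, and rounds before ranking gives a single ordered list of regions. Ranking each scenario separately would show whether the hotspots shift between crossing in front of the vehicle and walking beside it.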

HOTSPOT RESULTS

We found that users primarily looked at the hood, grill, and above the front tire on the side where they started crossing, regardless of whether they were crossing in front of the car or walking beside it.

Drawing courtesy of Leila Kazemzadeh

ANIMATION TESTING

Various animations with different sizes, dynamism, and directionality were tested with users via a paper car prototype with a projector inside. The projector mapped our animations onto the outside of the car with the help of the program MadMapper, allowing us to act out crossing scenarios that included our animated signifiers with users in order to determine which were the most effective.

Animation Sample

MADMAPPER Animation Software

Projector Setup inside “AV”

AUDIO TESTING

Audio indicators were tested similarly to the animations, using a paper car and a speaker to act out pedestrian crossing scenarios with users. We observed how our “pedestrians” responded to various sound patterns and how they interpreted the signals, to determine the most appropriate audio cues for our interface at each of its stages.

Testing Setup

Audio Testing States

INDICATOR TESTING RESULTS

The audio and visual testing gave us insights into how we could iterate on our design and improve the experience.

REVISED MINDSETS

Our main observations led to the creation of two specific pedestrian mindsets: the cautious mindset and the pedestrian-first mindset. This led us to revise some of our prior mindsets and design our solution based on the new ones.

FINAL DESIGN DECISIONS